A real highlight this week was a talk by Luke Church and Sharath Srinivasan of Africa’s Voices Foundation. It is a rare delight to see a genuinely interdisciplinary presentation, combining computer science and social science perspectives – and to have speakers recognise that the audience likely understood terms such as ‘integrity’ very differently.

Both speakers brought critical reflections on their own fields. Luke spoke of the moral toxicity of the tech industry; Sharath of how, to scale social research, practitioners shift the interpretive burden to others, ‘instrument’ research subjects, or abstract away the inescapable role of interpretation and take data as fact. Social science methods are political, material, self-interested and pragmatic. Tech and data methods, on the other hand (at least as practised by most of the tech industry), are covertly political, essentialist and capitalist. Such methods seek to reduce or clean reality into formalisms, and then reify the formalised representation. They take the world, simplify it, claim the simplification is the essence of the world, and then wield it back on the world. Reality becomes what can be expressed to a computer. (And this is rather tough, as computer scientists know well that computers understand nothing.)

I appreciated the concept of the ‘death of social sciences’ – now everyone is a data collector, an analyst, a researcher. Everyone is an expert, or no one is; or perhaps only ‘the kings of computation’ are.

With these critical perspectives, we can imagine an interdisciplinary frame which takes the worst of both worlds. You could place a lower burden on research subjects through surveillance and datafication, relieve the interpretative burden by scaling pattern detection, and become complicit in how knowledge constructed like this shapes resource allocation and power. (This is, to some extent, how much of the humanitarian sector thinks about data technologies: organisations amass data points and hope that some kind of magic will turn them into good outcomes for their beneficiaries.)

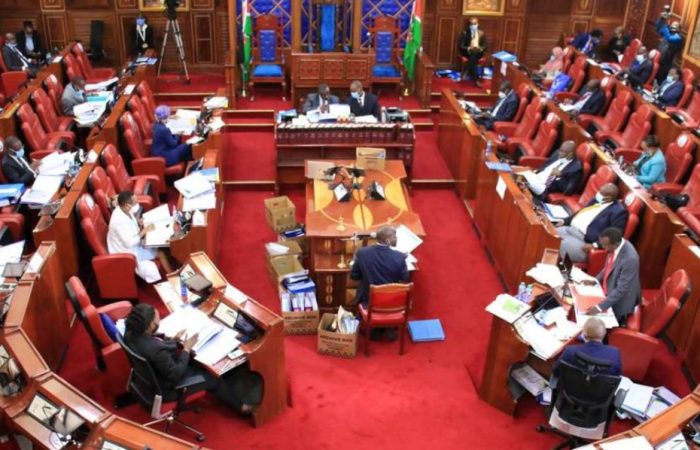

Luckily, AVF do not do this 🙂 Instead, Luke and Sharath talked about interdisciplinary work to create a common social accountability platform, which maximises the chance that citizen perspectives are heard in decisions that affect their lives. Today’s humanitarian and development surveys are shaped by the sector, programme or mandate involved, so because of the structures and politics of the aid organisations, a local resident may be asked to talk about resilience today and about durable solutions tomorrow (both humanitarian sector terms). The AVF vision of citizen engagement and evidence for strengthening social accountability is about connecting people with authorities in ways that make sense to them. They are finding that this has value. When their platform was used in Mogadishu, it turned out that people thought crowdfunding (broadly understood) was a good way to tackle some issues, yet no aid organisation working in, say, shelter or livelihoods would have been able to ask an open question that surfaced this idea.

They use local radio combined with SMS or other messaging. Engaging citizens on their own terms as social agents carries burdens (you have to go where they are, and curate collective discussion they value), so this sort of method has a very different cost from extractive data collection and surveillance. If you do it well, you get lots of messy textual data, which you then need to understand. AVF use complex mixed methods to give this material a form of expression that can influence decision-makers. It’s not just a conventional technical solution, because “data science senses voices, it doesn’t listen to them”.

The AVF perspective on the ethical use of AI is that interpretation of data matters and should be done by people; that AI is about augmenting, not automating, labour; and that technologies should be designed for doubt and for curiosity. It is better, therefore, to get people to do overt interpretation of opinion information (the messy text data) than to have machines doing covert politics with it. So machine learning/AI is used to augment the interpretative capacities of human researchers, with explicit representation of structural and value uncertainty. Provenance tracing is used to maintain authenticity. The AI lets the data processing remain human while working at a larger scale than would otherwise be possible, supporting the human work of research analysis.

The result is that AVF are using AI to help build engagement spaces that give humans rigorous and timely qualitative evidence, and the interpretative insight that comes from it, for decisions that matter at scale. These spaces are justified because the people taking part feel agency over the decisions which affect them, and because they value the discussion itself. The research creates a different sense of social-embodied knowing, where we feel and understand differently because human authenticity is retained.

The post was originally published on Dr Laura James’s personal blog.

Dr James is an Entrepreneur in Residence at Cambridge Computer Lab.

Follow her on Twitter: @LaurieJ